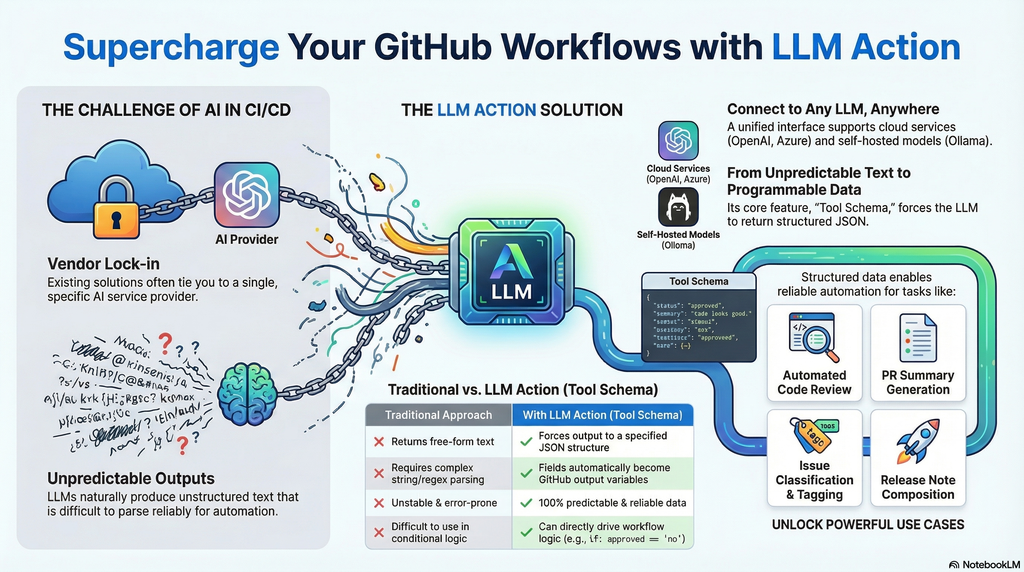

In the AI era, integrating Large Language Models (LLMs) into CI/CD pipelines has become an important way to improve development efficiency. However, existing solutions are often tied to a specific service provider, and LLM output is typically free-form text that is difficult to parse and use reliably in automated workflows. LLM Action was created to address these pain points.

Its core feature is support for structured output via a Tool Schema: you predefine a JSON Schema, and the LLM's response is constrained to that format. Instead of returning a block of free text, the model produces predictable, parseable structured data. Each field is automatically exposed as a GitHub Actions output variable, so later steps can use it directly without extra string parsing or regex processing. This largely removes the problem of unstable LLM output being hard to integrate into automated workflows.
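
To make the idea concrete, here is a minimal Python sketch of the two halves of that flow: checking the model's tool-call arguments against a schema, and exposing each field as a step output. It is not LLM Action's implementation. The `REVIEW_SCHEMA` example and the helper names are hypothetical, the validation covers only required keys and top-level types (a real setup would use a full JSON Schema validator), and the output uses the heredoc-style `name<<DELIMITER` syntax that GitHub Actions accepts in the `GITHUB_OUTPUT` file.

```python
import json
import os
import uuid

# Hypothetical schema for a code-review step; field names follow the
# "Automated Code Review" use case (score, issues, suggestions).
REVIEW_SCHEMA = {
    "type": "object",
    "properties": {
        "score": {"type": "integer"},
        "issues": {"type": "array", "items": {"type": "string"}},
        "suggestions": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["score", "issues", "suggestions"],
}

# Minimal checks for a few JSON Schema types (bool is excluded from numbers).
_TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
    "array": lambda v: isinstance(v, list),
    "object": lambda v: isinstance(v, dict),
}


def parse_tool_arguments(raw: str, schema: dict) -> dict:
    """Parse the model's tool-call arguments and check required keys and
    top-level property types against the schema."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("tool arguments must be a JSON object")
    for key in schema.get("required", []):
        if key not in data:
            raise ValueError(f"missing required field: {key}")
    for key, spec in schema.get("properties", {}).items():
        check = _TYPE_CHECKS.get(spec.get("type"))
        if key in data and check is not None and not check(data[key]):
            raise ValueError(f"field {key!r} is not of type {spec['type']}")
    return data


def format_github_outputs(data: dict) -> str:
    """Render each top-level field in GITHUB_OUTPUT heredoc syntax.
    Non-string values (numbers, arrays, objects) are JSON-encoded."""
    chunks = []
    for key, value in data.items():
        text = value if isinstance(value, str) else json.dumps(value)
        # A random delimiter is very unlikely to appear inside the value.
        delimiter = f"EOF_{uuid.uuid4().hex}"
        chunks.append(f"{key}<<{delimiter}\n{text}\n{delimiter}\n")
    return "".join(chunks)


if __name__ == "__main__":
    raw = '{"score": 7, "issues": ["unused import"], "suggestions": []}'
    fields = parse_tool_arguments(raw, REVIEW_SCHEMA)
    text = format_github_outputs(fields)
    output_path = os.environ.get("GITHUB_OUTPUT")
    if output_path:
        # Inside a GitHub Actions step: append to the outputs file.
        with open(output_path, "a", encoding="utf-8") as fh:
            fh.write(text)
    else:
        print(text, end="")
```

Encoding non-string values as JSON keeps arrays such as `issues` intact; a later step could decode them with the `fromJSON()` expression function.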

LLM Action also provides a unified interface to any OpenAI-compatible service. Cloud services such as OpenAI and Azure OpenAI, as well as self-hosted options such as Ollama, LocalAI, LM Studio, and vLLM, are all supported, and you can switch between them seamlessly.
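
Part of what makes switching providers cheap is that OpenAI-compatible servers share the Chat Completions request shape, and a JSON Schema can be passed as a function tool's `parameters`. The sketch below builds (but does not send) such a request with the standard library. It assumes the listed base URLs are the usual local defaults (check your own deployment) and that the server honors `tool_choice`; support for forced tool calls varies between self-hosted servers. Azure OpenAI uses a different URL and authentication scheme and is left out. The function names and the `structured_output` tool name are hypothetical, not LLM Action's API.

```python
import json
import urllib.request

# Commonly used default base URLs; treat these as assumptions and verify
# them against your own deployment.
BASE_URLS = {
    "openai": "https://api.openai.com/v1",
    "ollama": "http://localhost:11434/v1",
    "lmstudio": "http://localhost:1234/v1",
    "vllm": "http://localhost:8000/v1",
}


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str,
                       schema: dict, tool_name: str = "structured_output"):
    """Build a Chat Completions request that asks the model to answer by
    calling a single function whose parameters are described by `schema`."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": [{
            "type": "function",
            "function": {"name": tool_name, "parameters": schema},
        }],
        # Request that the model call this specific tool.
        "tool_choice": {"type": "function", "function": {"name": tool_name}},
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_tool_arguments(response_body: dict) -> str:
    """Return the JSON-encoded arguments string from the first tool call."""
    message = response_body["choices"][0]["message"]
    return message["tool_calls"][0]["function"]["arguments"]


if __name__ == "__main__":
    schema = {"type": "object", "properties": {"title": {"type": "string"}}}
    # Only the base URL (plus key and model) changes between providers.
    for name, url in BASE_URLS.items():
        req = build_chat_request(url, "sk-placeholder", "your-model",
                                 "Summarize this PR", schema)
        print(name, req.full_url)
```

Because the payload is identical across providers, switching backends comes down to changing the base URL, API key, and model name.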

Practical use cases include:

- Automated Code Review: Define a Schema that outputs fields such as `score`, `issues`, and `suggestions`, and use them directly to decide whether the review passes.
- PR Summary Generation: Produce structured `title`, `summary`, and `breaking_changes` fields to update the PR description automatically.
- Issue Classification: Output `category`, `priority`, and `labels` to tag Issues automatically.
- Release Notes: Generate `features`, `bugfixes`, and `breaking` arrays and compose them into formatted release notes automatically.
- Multi-language Translation: Output several language fields at once, completing a multi-language translation in a single API call.
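
Once fields arrive as step outputs, downstream logic is ordinary code. The sketch below covers two of the use cases above: gating a review on `score`, and composing release notes from the `features`, `bugfixes`, and `breaking` arrays. It assumes array outputs are JSON-encoded strings passed to the script through environment variables; the `SCORE`, `FEATURES`, `BUGFIXES`, `BREAKING`, and `VERSION` names, the section headings, and the passing threshold of 7 are all hypothetical choices for illustration.

```python
import json
import os
import sys

# Hypothetical threshold; choose whatever fits your review policy.
PASSING_SCORE = 7


def review_passes(score: str, threshold: int = PASSING_SCORE) -> bool:
    """Step outputs are strings, so convert the score before comparing."""
    return int(score) >= threshold


def compose_release_notes(version: str, features: list[str],
                          bugfixes: list[str], breaking: list[str]) -> str:
    """Render Markdown release notes, skipping empty sections."""
    sections = [
        ("Breaking Changes", breaking),
        ("Features", features),
        ("Bug Fixes", bugfixes),
    ]
    lines = [f"## {version}"]
    for title, items in sections:
        if items:
            lines.append("")
            lines.append(f"### {title}")
            lines.extend(f"- {item}" for item in items)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    notes = compose_release_notes(
        os.environ.get("VERSION", "Unreleased"),
        json.loads(os.environ.get("FEATURES", "[]")),
        json.loads(os.environ.get("BUGFIXES", "[]")),
        json.loads(os.environ.get("BREAKING", "[]")),
    )
    print(notes, end="")
    # Exit non-zero (failing the step) when the review score is too low.
    if not review_passes(os.environ.get("SCORE", str(PASSING_SCORE))):
        sys.exit(1)
```

In a workflow, a script like this would run in a later step, with the LLM step's outputs mapped into its environment.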

Through Schema definitions, LLM Action turns AI output from “unpredictable text” into “programmable data,” making truly end-to-end AI-automated workflows possible.
