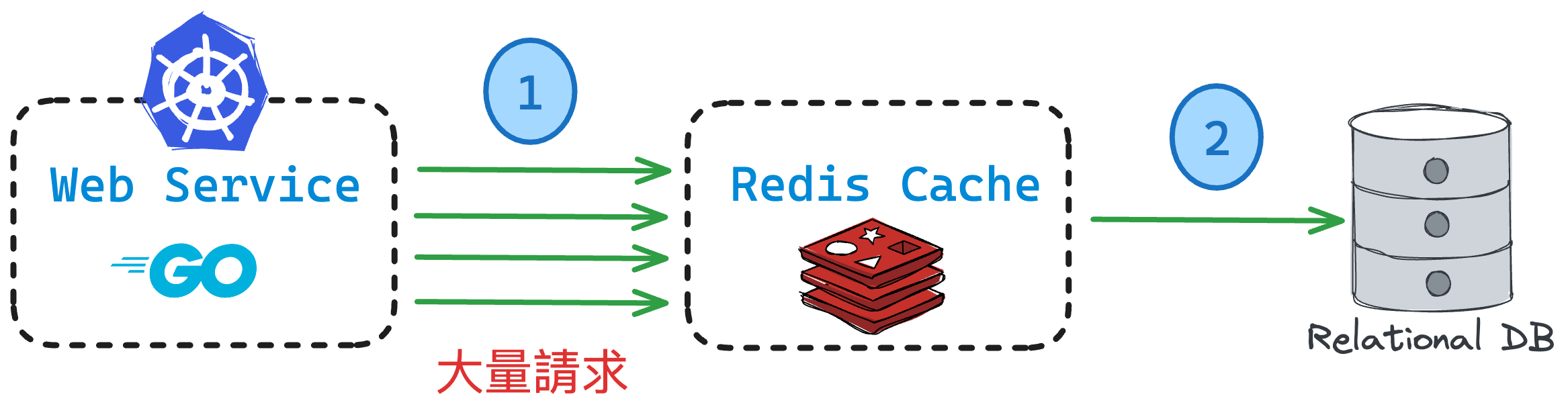

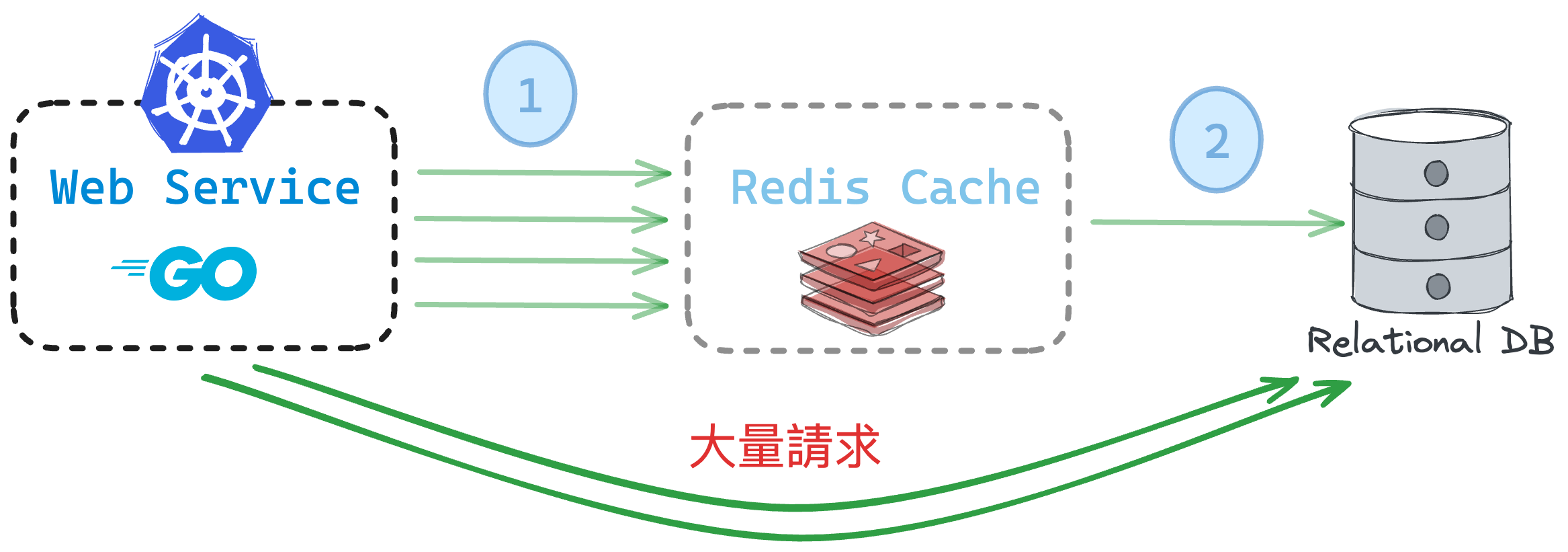

The diagram above illustrates a common architecture for web services: a cache sits between the service and the database to reduce the load on the database. When integrating such services, however, three major cache problems often come up: Cache Avalanche, Hotspot Invalid, and Cache Penetration. Cache Hotspot Invalid is a very common one. When a frequently accessed (hot) key in the cache expires or is evicted, a large number of requests miss the cache at the same moment and all hit the backend database. This puts an excessive load on the database and can even crash it, as shown in the diagram where the cache key of an article expires. This article introduces how to use singleflight to solve the Cache Hotspot Invalid problem. singleflight is a package in the Go extended library golang.org/x/sync (not the standard library sync package), and it prevents duplicate requests for the same key from hitting the backend database simultaneously.

Simulating Cache Hotspot Invalid

We will write a simple example to simulate the Cache Hotspot Invalid problem. A Go map serves as a simple caching mechanism: once data has been stored in it, fetching the same data again returns it directly from the cache instead of querying the database.

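A minimal sketch of such a cache, assuming a hypothetical `Cache` type with `Get` and `Set` methods (the names are illustrative, not taken from the original code). The `sync.RWMutex` is an addition so that the map can be shared safely by the concurrent requests in the next step.

```go
package main

import (
	"fmt"
	"sync"
)

// Cache is a hypothetical in-memory cache backed by a Go map.
// The RWMutex makes it safe to use from multiple goroutines.
type Cache struct {
	mu   sync.RWMutex
	data map[string]string
}

// NewCache returns an empty cache.
func NewCache() *Cache {
	return &Cache{data: make(map[string]string)}
}

// Get returns the cached value and whether the key was present.
func (c *Cache) Get(key string) (string, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	v, ok := c.data[key]
	return v, ok
}

// Set stores a value under the given key.
func (c *Cache) Set(key, value string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = value
}

func main() {
	c := NewCache()
	if _, ok := c.Get("article:1"); !ok {
		fmt.Println("missing cache") // the first lookup misses
	}
	c.Set("article:1", "hello")
	if v, ok := c.Get("article:1"); ok {
		fmt.Println("cache hit:", v) // later lookups are served from the map
	}
}
```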

Next, we will use goroutines to simulate multiple requests simultaneously fetching data from the cache, so that we can observe the problem of Cache Hotspot Invalid.

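A self-contained sketch of this simulation, assuming a hypothetical `DB` type whose `queryDB` method sleeps 50 ms to mimic a slow database and counts how often it runs. Each request checks the map first and falls back to `queryDB` on a miss, printing "missing cache" like the output described below. How many requests reach the database depends on goroutine scheduling, so the count can vary between runs.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// DB is a hypothetical service: a map cache in front of a slow database.
type DB struct {
	queries int64 // first field keeps it 64-bit aligned for sync/atomic
	mu      sync.RWMutex
	cache   map[string]string
}

func NewDB() *DB { return &DB{cache: make(map[string]string)} }

// queryDB simulates a slow database read and counts how often it runs.
func (db *DB) queryDB(key string) string {
	atomic.AddInt64(&db.queries, 1)
	time.Sleep(50 * time.Millisecond)
	return "value of " + key
}

// Get is a naive cache-aside read: on a miss, every caller queries the DB.
func (db *DB) Get(key string) string {
	db.mu.RLock()
	v, ok := db.cache[key]
	db.mu.RUnlock()
	if ok {
		fmt.Println("cache hit")
		return v
	}
	fmt.Println("missing cache")
	v = db.queryDB(key)
	db.mu.Lock()
	db.cache[key] = v
	db.mu.Unlock()
	return v
}

// simulate fires n concurrent requests for the same key and returns how
// many of them reached the database.
func simulate(n int) int64 {
	db := NewDB()
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			db.Get("article:1")
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&db.queries)
}

func main() {
	fmt.Println("database queries:", simulate(5))
}
```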

Below is the execution result, where we can see 3 instances of “missing cache,” indicating that 3 requests simultaneously hit the backend database, while the remaining 2 instances are cache hits. This is the issue of Cache Hotspot Invalid. Ideally, we should avoid accessing the database more than once, which can effectively protect the backend database.


Using singleflight to Solve the Cache Problem

Next, let’s use singleflight to solve the problem of Cache Hotspot Invalid. This package can prevent duplicate requests from hitting the backend database simultaneously. The expected correct result should be as follows:


We should only see one instance of “missing cache,” with the rest of the data being retrieved from the cache. Let’s see how to use singleflight to solve the problem. Below is the code for accessing the DB.

How should we modify the code to use singleflight to solve the problem of Cache Hotspot Invalid? Below is the modified code.


As you can see, the code around the original db.cache.Set is now wrapped in a call to Do on a singleflight.Group, which prevents duplicate requests for the same key from hitting the backend database simultaneously.

Besides Do, consider this scenario: 100 requests fetch the same data from the cache at the same time, but the database takes a long time to return it. With Do, every one of those requests blocks until the single query finishes, and we would rather add a timeout so that requests do not wait indefinitely. In this case, we can use DoChan of singleflight.Group. Below is the modified code.


In the main.go program, the changes should be as follows:

The result is as follows: requests 10 and 11 time out after waiting 100ms and 110ms respectively, and then return nil directly.


Understanding the Implementation of singleflight

Now that we have used singleflight to solve the Cache Hotspot Invalid problem, let's look at how singleflight is implemented, starting with the Do method.


singleflight is a mechanism for avoiding repeated execution of the same work, and its Do method ensures that only one execution is in progress for a given key. If duplicate calls come in while it runs, they wait for the original call to complete and receive the same result.

Let’s explain in detail the implementation of this Do method and the underlying concept:

- First, the Do method takes two parameters: key and fn. The key is used to uniquely identify different tasks, while fn is the actual work function that returns an interface{} and an error.

- At the beginning of the method, we can see that the code locks a mutex to ensure that there are no race conditions during subsequent operations.

- Next, the code checks whether the map g.m is nil, and if so, initializes it (the map is created lazily on first use). This map stores the call status for each key.

- Then, the code checks if there is already a call in progress for the same key. If so, it increments the count of duplicate calls, releases the mutex, and waits for the original call to complete. Once the original call is completed, the duplicate call will return the same result.

- If there is no call in progress for the same key, the code creates a new call status and adds it to the map, then releases the mutex.

- Finally, the code calls the doCall method to execute the actual work function fn. Once fn returns, Do returns the result, the error, and a shared flag (c.dups > 0) that reports whether the result was also delivered to duplicate callers.

This implementation of Do ensures that only one execution is in progress for a given key and that duplicate calls receive the same result, which avoids repeating the same work and saves system resources. Under the hood, the mutex-protected map g.m guarantees that each key has at most one call in flight, and each call's sync.WaitGroup lets the duplicate callers block until that call finishes.
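
To make these steps concrete, here is a simplified, stdlib-only sketch of the same structure. It is not the package's source: the real doCall also recovers panics and detects runtime.Goexit in fn, which this sketch omits.

```go
package main

import (
	"fmt"
	"sync"
)

// call tracks one in-flight (or just completed) execution for a key.
type call struct {
	wg   sync.WaitGroup // duplicates wait on this until fn returns
	val  interface{}
	err  error
	dups int // number of callers that joined this call
}

// Group is a simplified sketch of singleflight.Group (no panic handling).
type Group struct {
	mu sync.Mutex
	m  map[string]*call // lazily initialized
}

// Do runs fn at most once at a time per key; concurrent callers with the
// same key wait for that run and receive its result. The bool reports
// whether the result was shared with other callers.
func (g *Group) Do(key string, fn func() (interface{}, error)) (interface{}, error, bool) {
	g.mu.Lock()
	if g.m == nil {
		g.m = make(map[string]*call)
	}
	if c, ok := g.m[key]; ok {
		// A call for this key is already in flight: join it and wait.
		c.dups++
		g.mu.Unlock()
		c.wg.Wait()
		return c.val, c.err, true
	}
	c := new(call)
	c.wg.Add(1)
	g.m[key] = c
	g.mu.Unlock()

	// Run the work outside the lock so other keys are not blocked.
	c.val, c.err = fn()

	g.mu.Lock()
	delete(g.m, key) // later callers start a fresh execution
	g.mu.Unlock()
	c.wg.Done() // wake up every duplicate caller

	return c.val, c.err, c.dups > 0
}

func main() {
	var g Group
	var wg sync.WaitGroup
	for i := 0; i < 3; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			v, _, shared := g.Do("article:1", func() (interface{}, error) {
				return "value", nil
			})
			fmt.Println(i, v, shared)
		}(i)
	}
	wg.Wait()
}
```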

Next, let’s take a look at the implementation of DoChan.


The DoChan method in the singleflight package is similar to the Do method described above, but it returns a channel that will receive the result when it is ready.

Let’s explain in detail what the DoChan method does and how it differs from the Do method:

- The DoChan method also takes two parameters: key and fn. The key is used to uniquely identify different tasks, while fn is the actual work function that returns an interface{} and an error.

- At the beginning of the method, the code locks a mutex to ensure that there are no race conditions during subsequent operations.

- Next, the code checks whether the map g.m is nil, and if so, initializes it (the map is created lazily on first use). This map stores the call status for each key.

- Then, the code checks if there is already a call in progress for the same key. If so, it increments the count of duplicate calls, adds the new channel to the chans slice of the call status, releases the mutex, and returns the new channel.

- If there is no call in progress for the same key, the code creates a new call status, adds the new channel to the chans slice of the call status, adds the call status to the map, and finally releases the mutex.

- Finally, the code calls the doCall method to execute the actual work function fn. This method is executed in a new goroutine, allowing the DoChan method to immediately return the channel without waiting for the work function to complete.

In summary, the main difference between DoChan and Do lies in the return type: Do returns the result and error directly, while DoChan returns a channel that will receive the result when it is ready. Because the caller gets a channel instead of blocking inside the call, it can decide how to wait, for example together with a timeout, which enables asynchronous processing. One thing to note is the line ch := make(chan Result, 1). Why is the buffer size set to 1? Think about the reason for this.
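
A matching simplified sketch of DoChan (stdlib-only and not the package's source; panic handling is omitted). Its comments give one answer to the buffer-size question: with capacity 1, doCall can always deliver the result and exit, even when the caller has stopped listening after a timeout.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// Result mirrors the shape of singleflight.Result.
type Result struct {
	Val    interface{}
	Err    error
	Shared bool
}

type call struct {
	val   interface{}
	err   error
	dups  int
	chans []chan<- Result // one result channel per waiting caller
}

// Group is a simplified sketch that supports only DoChan.
type Group struct {
	mu sync.Mutex
	m  map[string]*call
}

func (g *Group) DoChan(key string, fn func() (interface{}, error)) <-chan Result {
	// Capacity 1 lets doCall send without blocking, even if this caller
	// has already given up (for example after a timeout), so no goroutine leaks.
	ch := make(chan Result, 1)
	g.mu.Lock()
	if g.m == nil {
		g.m = make(map[string]*call)
	}
	if c, ok := g.m[key]; ok {
		c.dups++
		c.chans = append(c.chans, ch) // join the in-flight call
		g.mu.Unlock()
		return ch
	}
	c := &call{chans: []chan<- Result{ch}}
	g.m[key] = c
	g.mu.Unlock()

	go g.doCall(c, key, fn) // run the work asynchronously
	return ch
}

func (g *Group) doCall(c *call, key string, fn func() (interface{}, error)) {
	c.val, c.err = fn()
	g.mu.Lock()
	delete(g.m, key)
	for _, ch := range c.chans {
		ch <- Result{Val: c.val, Err: c.err, Shared: c.dups > 0}
	}
	g.mu.Unlock()
}

func main() {
	var g Group
	slow := func() (interface{}, error) {
		time.Sleep(200 * time.Millisecond)
		return "value", nil
	}
	select {
	case r := <-g.DoChan("article:1", slow):
		fmt.Println("got", r.Val)
	case <-time.After(50 * time.Millisecond):
		fmt.Println("timeout") // doCall can still send into the buffered channel
	}
	r := <-g.DoChan("article:1", slow) // joins the call that is still running
	fmt.Println("later caller got", r.Val, "shared:", r.Shared)
}
```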

This is why we use select to handle the timeout: the caller waits on both the result channel and a timeout, so requests do not wait indefinitely.

singleflight (Generic)

Go 1.18 introduced generics, but the singleflight package in golang.org/x/sync has not been made generic. Former Go team member Brad Fitzpatrick (bradfitz) proposed a generic version, but it has not been adopted yet, so he created a generic package inside the Tailscale codebase, which other projects can also use.


Below is the type declaration and part of the code, showing that this version of singleflight supports generics.

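As an illustration only (this is not the Tailscale source), a simplified generic Group could look like the following. K is any comparable key type and V is the result type, so callers get a typed value back instead of an interface{} that needs a type assertion. It requires Go 1.18 or later and, like the earlier sketches, omits panic handling.

```go
package main

import (
	"fmt"
	"sync"
)

// call holds the result of one execution, typed by V.
type call[V any] struct {
	wg   sync.WaitGroup
	val  V
	err  error
	dups int
}

// Group is a hypothetical, simplified generic variant of singleflight.Group.
type Group[K comparable, V any] struct {
	mu sync.Mutex
	m  map[K]*call[V]
}

// Do has the same semantics as the non-generic version, but with typed keys
// and results.
func (g *Group[K, V]) Do(key K, fn func() (V, error)) (V, error, bool) {
	g.mu.Lock()
	if g.m == nil {
		g.m = make(map[K]*call[V])
	}
	if c, ok := g.m[key]; ok {
		c.dups++
		g.mu.Unlock()
		c.wg.Wait()
		return c.val, c.err, true
	}
	c := new(call[V])
	c.wg.Add(1)
	g.m[key] = c
	g.mu.Unlock()

	c.val, c.err = fn()

	g.mu.Lock()
	delete(g.m, key)
	g.mu.Unlock()
	c.wg.Done()
	return c.val, c.err, c.dups > 0
}

func main() {
	var g Group[int, string] // int keys, string results: no type assertion needed
	v, err, shared := g.Do(42, func() (string, error) {
		return "article 42", nil
	})
	fmt.Println(v, err, shared) // prints: article 42 <nil> false
}
```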

Conclusion

The code above is available here (including the generic package). This article introduced how to use singleflight to solve the Cache Hotspot Invalid problem. singleflight, from the golang.org/x/sync module, prevents duplicate requests from hitting the backend database simultaneously. Besides Do, we also covered DoChan, which lets callers time out instead of waiting indefinitely. Finally, we walked through the implementation of singleflight so that developers can understand and use the package more easily. When you run into the Cache Hotspot Invalid problem, singleflight is a simple and effective way to solve it.
