kubectl-ai is an open-source project that brings Large Language Models (LLMs) into Kubernetes operations, letting users manage K8s clusters through natural-language requests instead of hand-written kubectl commands. This article looks at how it tackles the complexity of traditional kubectl usage and lowers the barrier to entry for Kubernetes users.

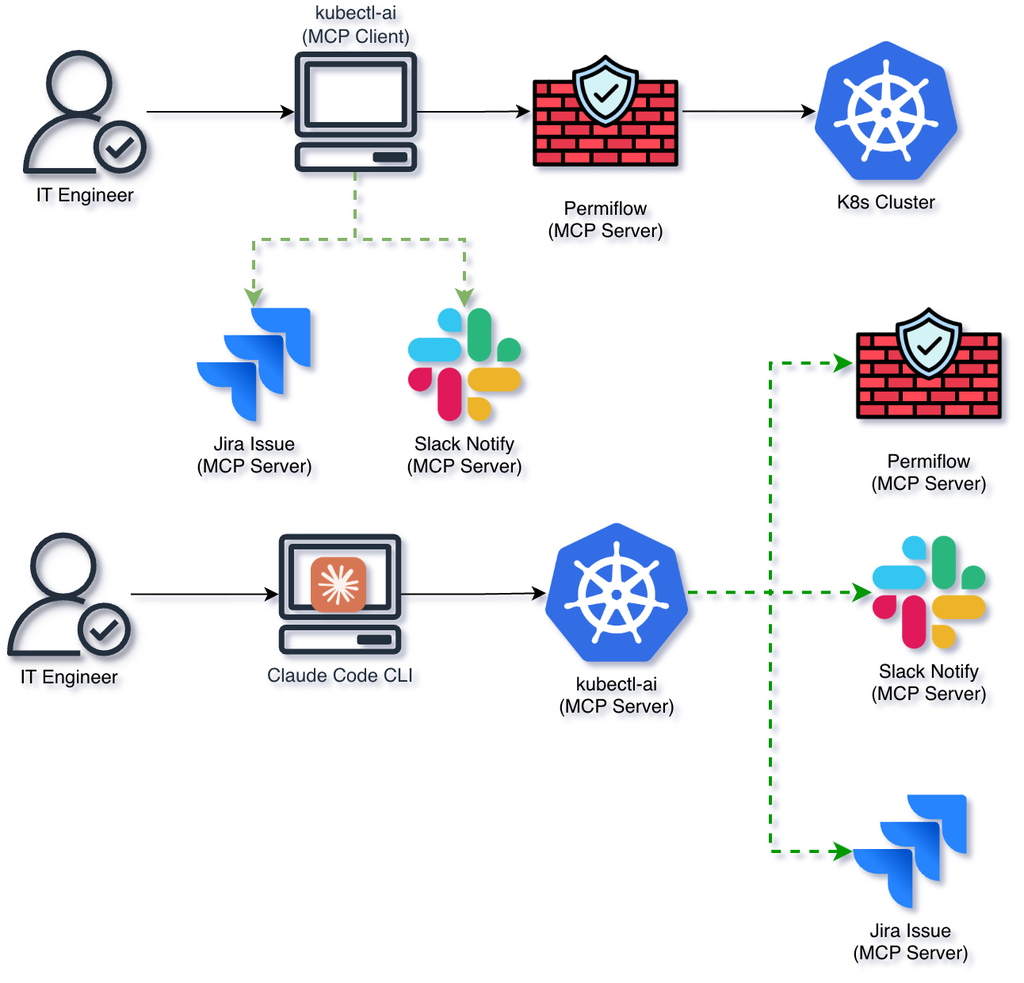

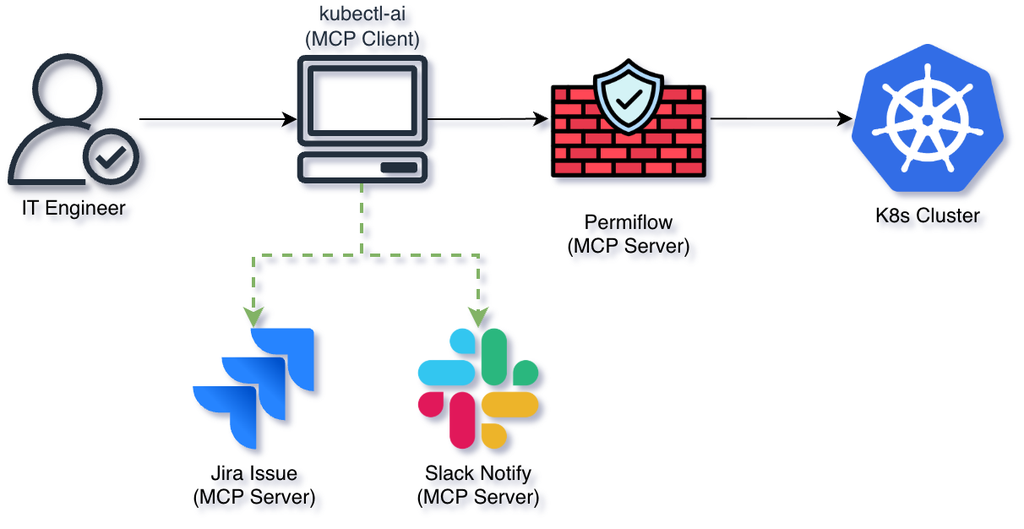

We’ll look closely at kubectl-ai’s core architecture: its Agent conversation management system, its extensible tool framework, and its use of the Model Context Protocol (MCP). The tool supports multiple LLM providers, from Google Gemini, Anthropic’s Claude Sonnet, and Azure OpenAI to locally deployed models, and it can run in two MCP modes: MCP-Server and MCP-Client.

KubeSummit Presentation Slides

This was my first time participating in the 2025 Taiwan KubeSummit conference. The presentation slides are available on Speaker Deck. The 40-minute talk covered the following topics:

- Why do we need kubectl-ai?

- Three kubectl-ai use case scenarios

- kubectl-ai’s Agent architecture breakdown

Let’s dive into kubectl-ai’s three main use cases.

Three Major kubectl-ai Use Case Scenarios

1. K8s Problem Diagnostic Assistant

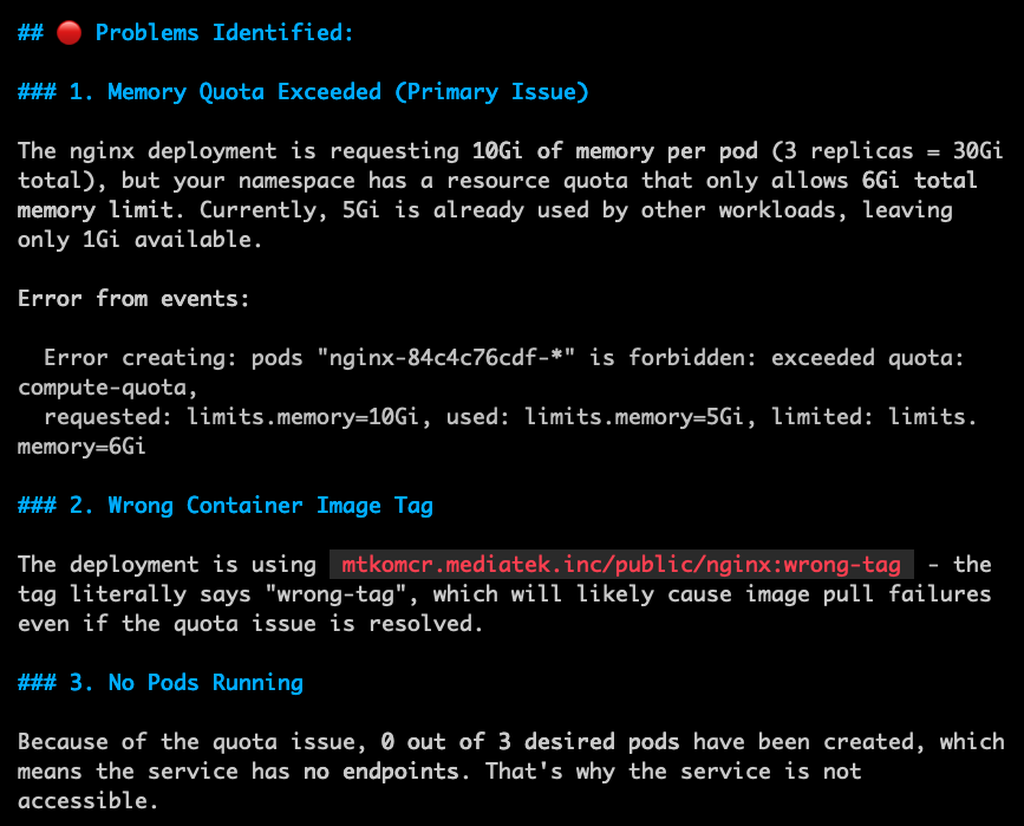

Errors and anomalies are routine in day-to-day Kubernetes operations. kubectl-ai acts as a diagnostic assistant: you describe the problem in natural language, and it inspects the cluster, identifies likely causes, and suggests fixes. For example, if you ask “Why won’t Nginx start?”, kubectl-ai analyzes the cluster state and returns specific troubleshooting steps and recommendations.

To demonstrate, we create an Nginx Deployment from a YAML manifest that contains two deliberate errors: the Nginx image tag is incorrect, and the memory request is set too high. When we ask kubectl-ai “Why won’t Nginx start?”, it identifies both configuration errors and proposes specific fixes.
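The two errors leave different traces in the Pod status, and that is the evidence any diagnosis has to work from. The sketch below is a hand-written approximation of that reasoning, not kubectl-ai’s implementation. It assumes Pod objects shaped like `kubectl get pod <name> -o json` output; the `diagnose_pod` helper, the hint wording, and the `nginx:1.99.99` tag are hypothetical.

```python
# Waiting reasons the kubelet reports when a container image cannot be pulled.
IMAGE_PULL_REASONS = {"ErrImagePull", "ImagePullBackOff", "InvalidImageName"}


def diagnose_pod(pod: dict) -> list[str]:
    """Return human-readable hints for one Pod object (as from `kubectl get pod -o json`)."""
    hints = []
    status = pod.get("status", {})

    # Scheduling failure: no node has enough allocatable resources for the requests.
    for cond in status.get("conditions", []):
        if (cond.get("type") == "PodScheduled" and cond.get("status") == "False"
                and cond.get("reason") == "Unschedulable"):
            message = cond.get("message", "")
            if "Insufficient memory" in message:
                hints.append("No node has enough allocatable memory for the request; "
                             "lower resources.requests.memory.")
            else:
                hints.append(f"Pod is unschedulable: {message}")

    # Start-up failure: the container image could not be pulled.
    for cs in status.get("containerStatuses", []):
        reason = cs.get("state", {}).get("waiting", {}).get("reason")
        if reason in IMAGE_PULL_REASONS:
            hints.append(f"Container {cs.get('name')!r} cannot pull {cs.get('image')!r} "
                         f"({reason}); check the image name and tag.")
    return hints


# Hypothetical Pod statuses, one per error described above.
pending_pod = {"status": {"phase": "Pending", "conditions": [{
    "type": "PodScheduled", "status": "False", "reason": "Unschedulable",
    "message": "0/3 nodes are available: 3 Insufficient memory."}]}}
bad_image_pod = {"status": {"phase": "Pending", "containerStatuses": [{
    "name": "nginx", "image": "nginx:1.99.99",
    "state": {"waiting": {"reason": "ImagePullBackOff"}}}]}}

for pod in (pending_pod, bad_image_pod):
    print(diagnose_pod(pod))
```

A Pod that cannot be scheduled never reaches the image-pull stage, so when both errors are present the memory problem usually surfaces first and the image error appears only after the request is lowered.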

As a diagnostic assistant, kubectl-ai shortens troubleshooting and lets operations teams spend more of their attention on core business needs. Many will point out that Claude Code can do something similar, but kubectl-ai is designed specifically for Kubernetes, so it works more directly with K8s operational concepts and can give more precise diagnostic recommendations. Beyond diagnostics, kubectl-ai offers two more major features, MCP-Server mode and MCP-Client mode, which we explore next.

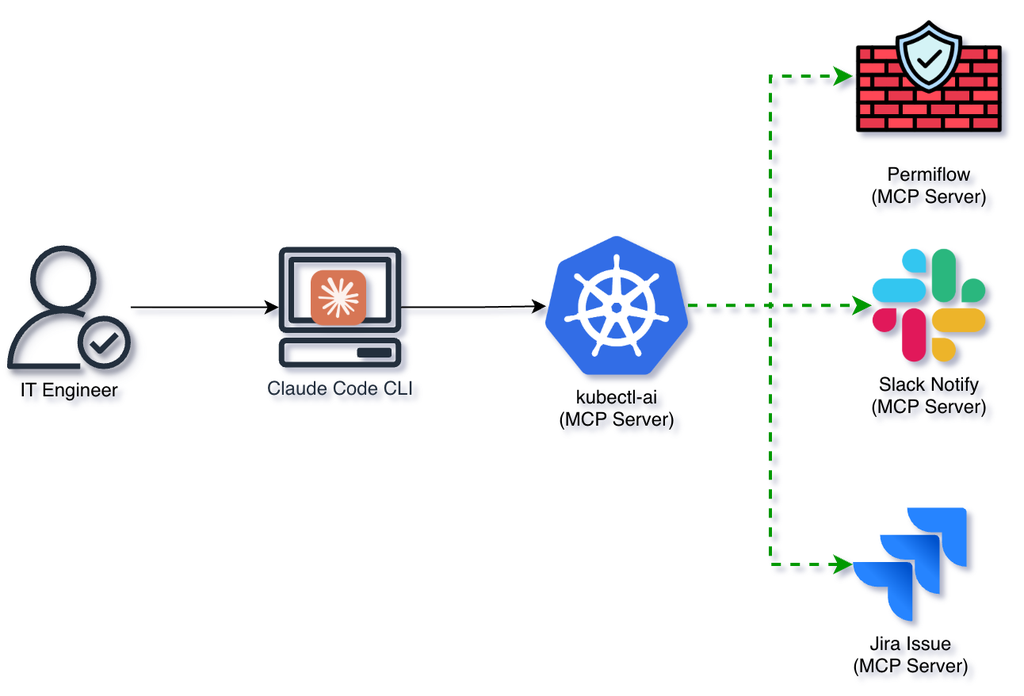

2. MCP-Server Mode: Extending LLM Capabilities

MCP-Server mode lets kubectl-ai act as a bridge that brings LLM capabilities into Kubernetes operations. In this mode, kubectl-ai can invoke external LLM services such as Google Gemini, Anthropic’s Claude Sonnet, or Azure OpenAI to handle complex natural-language requests. You can start the MCP server with a single command:

Use the following command in Claude Code to connect to the MCP-Server:


kubectl-ai provides two toolsets:

- `bash`: executes basic shell commands; suited to simple tasks.

- `kubectl`: designed for Kubernetes operations; generates and executes kubectl commands.

You can then interact with kubectl-ai through Claude Code or any other MCP-compliant client. For example, if you enter “Please check the status of all Pods” in Claude Code, kubectl-ai invokes the backend LLM to generate the corresponding kubectl command, executes it, and returns the results.
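MCP clients and servers exchange JSON-RPC 2.0 messages, and invoking a tool is a `tools/call` request whose result carries a list of content items. The sketch below builds such a request and extracts the text from a result. The tool name `kubectl` matches the toolset listed above, but the `command` argument is an assumption rather than kubectl-ai’s documented input schema, and `make_tool_call` and `extract_text` are hypothetical helpers.

```python
import json
from itertools import count

# Monotonic request IDs; JSON-RPC matches each response to its request by "id".
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def extract_text(response_json: str) -> str:
    """Join the text items of a tools/call result; raise if the tool reported an error."""
    result = json.loads(response_json)["result"]
    text = "\n".join(item["text"] for item in result.get("content", [])
                     if item.get("type") == "text")
    if result.get("isError"):
        raise RuntimeError(text)
    return text


# Hypothetical exchange for "Please check the status of all Pods".
request = make_tool_call("kubectl", {"command": "kubectl get pods --all-namespaces"})
print(request)

response = json.dumps({"jsonrpc": "2.0", "id": 1, "result": {
    "content": [{"type": "text", "text": "<kubectl output>"}], "isError": False}})
print(extract_text(response))
```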

MCP-Server mode also supports custom tool extensions, so you can add operational tools tailored to your own needs. You can also connect multiple MCP servers to build more complex workflows that span several tools.
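One way to picture several MCP servers feeding a single client is a router that merges their tool catalogs and dispatches each call to the server that owns the tool. The `ToolRouter` class, the `server__tool` naming scheme, and the stub tools below are hypothetical; real MCP servers would be reached over stdio or HTTP rather than as in-process Python callables.

```python
from typing import Callable

# A tool takes its JSON arguments and returns text, like an MCP tool result.
ToolFn = Callable[[dict], str]


class ToolRouter:
    """Hypothetical registry that merges tools from several MCP servers."""

    def __init__(self) -> None:
        self._tools: dict[str, ToolFn] = {}

    def register_server(self, server: str, tools: dict[str, ToolFn]) -> None:
        # Qualify each tool with its server name so identically named
        # tools exposed by different servers do not collide.
        for name, fn in tools.items():
            qualified = f"{server}__{name}"
            if qualified in self._tools:
                raise ValueError(f"duplicate tool: {qualified}")
            self._tools[qualified] = fn

    def list_tools(self) -> list[str]:
        return sorted(self._tools)

    def call(self, qualified_name: str, arguments: dict) -> str:
        if qualified_name not in self._tools:
            raise KeyError(f"unknown tool: {qualified_name}")
        return self._tools[qualified_name](arguments)


# Stub "servers" standing in for real MCP connections.
router = ToolRouter()
router.register_server("kubectl-ai", {"kubectl": lambda args: f"would run: {args['command']}"})
router.register_server("jira", {"create_issue": lambda args: f"would create issue in {args['project']}"})

print(router.list_tools())  # ['jira__create_issue', 'kubectl-ai__kubectl']
print(router.call("kubectl-ai__kubectl", {"command": "kubectl get pods -A"}))
```

Prefixing names with the server keeps the merged catalog unambiguous, and rejecting duplicates at registration time surfaces conflicts before any call is routed.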

Add the `--external-tools` flag to enable external tool support:

3. MCP-Client Mode: Multiple Services with One Command

Traditionally, when we want to produce an RBAC security report with kubectl, organize the findings, and then email them to a supervisor or post a Slack notification, we have to write complex scripts. With kubectl-ai’s MCP-Client mode, the same tasks can be done with a single natural-language command.

Consider, for example, the following natural-language instruction:

Scan RBAC permissions in the srv-gitea namespace, identify ServiceAccounts with excessive permissions, and create a Jira issue in the GAIA project with a summary of findings included in the description.

In this example, kubectl-ai generates the kubectl commands needed to scan RBAC permissions in the specified namespace, identifies over-privileged ServiceAccounts, and then uses the Jira API to create a new issue whose description summarizes the scan results. This simplifies the operational workflow and saves considerable time.
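The RBAC step of this workflow comes down to joining bindings to the rules they grant, and the Jira step to building a create-issue request. The sketch below does both on in-memory data. The over-privilege heuristic (wildcard verbs or resources, or a binding to the `cluster-admin` ClusterRole) is an assumption, not kubectl-ai’s rule; the ServiceAccount and Role names are hypothetical; and the payload follows the body shape of Jira’s REST API v2 `POST /rest/api/2/issue`, assuming the GAIA project has a `Task` issue type.

```python
import json


def grants_wildcards(rules: list[dict]) -> bool:
    """Assumed heuristic: any rule with wildcard verbs or resources is excessive."""
    return any("*" in r.get("verbs", []) or "*" in r.get("resources", []) for r in rules)


def find_overprivileged_service_accounts(bindings: list[dict], roles: dict, namespace: str) -> list[str]:
    """Return sorted "namespace/name" entries for flagged ServiceAccounts.

    bindings: RoleBinding/ClusterRoleBinding objects as dicts.
    roles: maps (roleRef kind, roleRef name) -> list of PolicyRule dicts.
    """
    flagged = set()
    for binding in bindings:
        ref = binding["roleRef"]
        rules = roles.get((ref["kind"], ref["name"]), [])
        is_cluster_admin = ref["kind"] == "ClusterRole" and ref["name"] == "cluster-admin"
        if not (is_cluster_admin or grants_wildcards(rules)):
            continue
        for subject in binding.get("subjects", []):
            if subject.get("kind") == "ServiceAccount":
                # Fall back to the scanned namespace if the subject omits one.
                sa_ns = subject.get("namespace", namespace)
                flagged.add(f"{sa_ns}/{subject['name']}")
    return sorted(flagged)


def jira_issue_payload(project_key: str, namespace: str, accounts: list[str]) -> dict:
    """Build a request body for Jira's REST API v2 create-issue endpoint."""
    lines = "\n".join(f"- {a}" for a in accounts)
    return {
        "fields": {
            "project": {"key": project_key},
            "summary": f"RBAC review: {len(accounts)} over-privileged ServiceAccount(s) in {namespace}",
            "description": f"Over-privileged ServiceAccounts in {namespace}:\n{lines}",
            "issuetype": {"name": "Task"},
        }
    }


# Hypothetical data standing in for Roles and RoleBindings read from srv-gitea.
roles = {
    ("Role", "gitea-admin"): [{"apiGroups": [""], "resources": ["*"], "verbs": ["*"]}],
    ("Role", "gitea-read"): [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list"]}],
}
bindings = [
    {"roleRef": {"kind": "Role", "name": "gitea-admin"},
     "subjects": [{"kind": "ServiceAccount", "name": "gitea"}]},
    {"roleRef": {"kind": "Role", "name": "gitea-read"},
     "subjects": [{"kind": "ServiceAccount", "name": "gitea-runner"}]},
]

accounts = find_overprivileged_service_accounts(bindings, roles, "srv-gitea")
print(json.dumps(jira_issue_payload("GAIA", "srv-gitea", accounts), indent=2))
```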

In short, MCP-Client mode lets users invoke multiple services and tools with a single natural-language instruction, building complex workflows without tedious scripts and lowering the technical barrier.

Conclusion

kubectl-ai changes how Kubernetes operations are carried out through its MCP-based architecture. Whether it serves as a diagnostic assistant or extends LLM capabilities and simplifies workflows through its MCP-Server and MCP-Client modes, kubectl-ai shows clear practical value. As the Kubernetes ecosystem continues to evolve, kubectl-ai is well placed to become an indispensable tool for operations teams, helping organizations run cloud-native infrastructure more efficiently and intelligently.